Configuring CUDA and cuDNN and Deploying TensorRT on WSL2

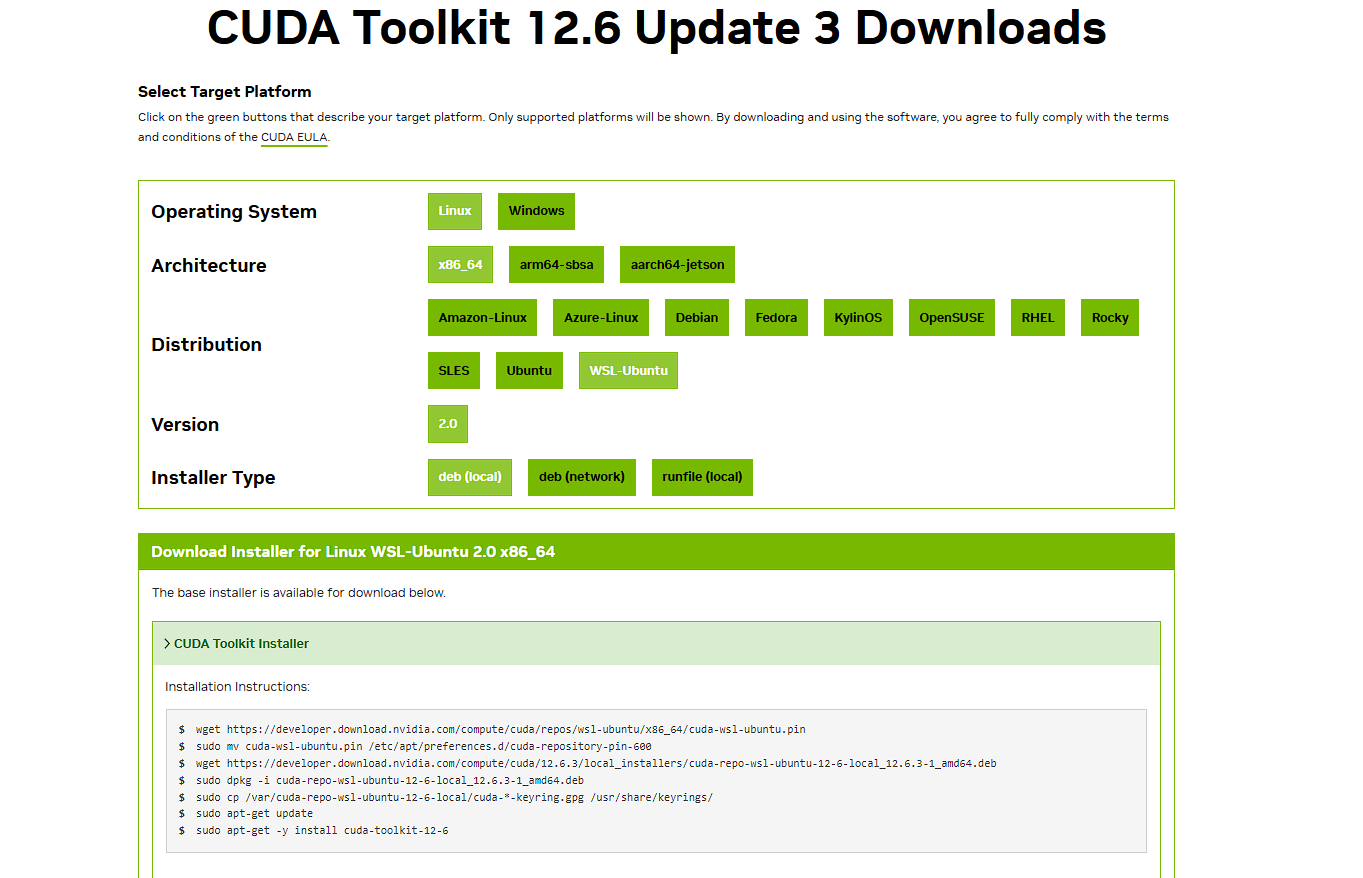

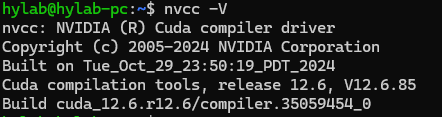

CUDA

```bash
# Copy and run the commands shown under your selection on NVIDIA's CUDA download page
```
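After the installer commands finish, one way to confirm which toolkit version is actually on the `PATH` is to read the output of `nvcc --version`. The sketch below extracts the release number from that output. It assumes `nvcc` is reachable, for example after adding `/usr/local/cuda/bin` to `PATH`, which the original steps do not show. `parse_nvcc_release` and `installed_cuda_release` are hypothetical helper names, and the sample line only illustrates the usual output format.

```python
import re
import shutil
import subprocess
from typing import Optional


def parse_nvcc_release(output: str) -> Optional[str]:
    """Extract the 'release X.Y' number from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", output)
    return match.group(1) if match else None


def installed_cuda_release() -> Optional[str]:
    """Run nvcc if it is on PATH; return None when it cannot be found."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(
        ["nvcc", "--version"], capture_output=True, text=True, check=False
    )
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    # Illustrative sample line in the format nvcc prints.
    sample = "Cuda compilation tools, release 12.6, V12.6.85"
    print(parse_nvcc_release(sample))
    print(installed_cuda_release())
```

If `installed_cuda_release()` returns `None` even though the installation succeeded, the toolkit's `bin` directory is most likely not on `PATH` in the current shell.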

Configuration complete

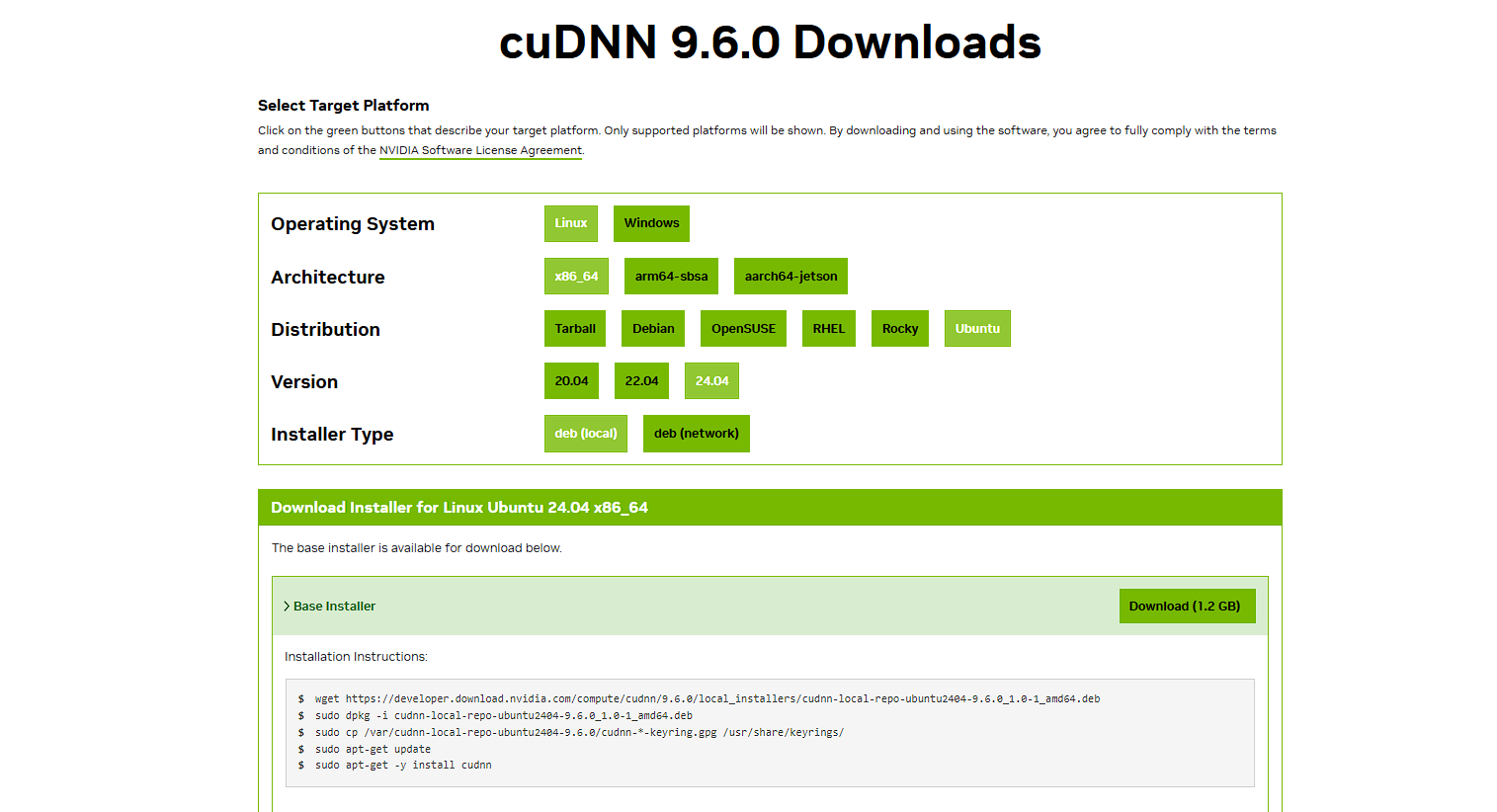

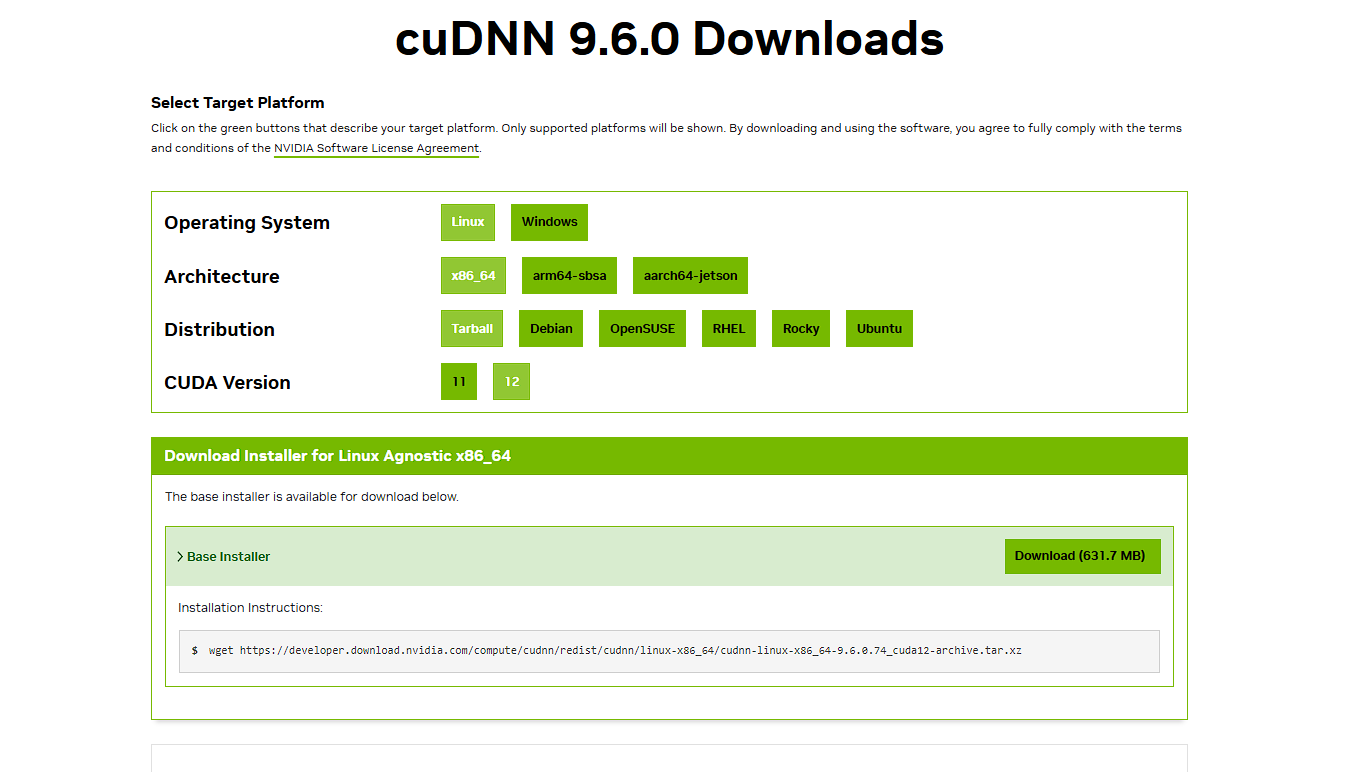

cuDNN

```bash
# Copy and run the commands shown under your selection on NVIDIA's cuDNN download page
```
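cuDNN records its version as `#define` lines (`CUDNN_MAJOR`, `CUDNN_MINOR`, `CUDNN_PATCHLEVEL`) in `cudnn_version.h`. The sketch below reads those lines to confirm the installation. The two header paths are assumptions: the actual location depends on whether cuDNN was installed from a package or a TAR archive. The helper names are hypothetical.

```python
import re
from pathlib import Path
from typing import Iterable, Optional

# Assumed header locations; the real path depends on the installation method.
CANDIDATE_HEADERS = (
    "/usr/include/cudnn_version.h",
    "/usr/local/cuda/include/cudnn_version.h",
)


def parse_cudnn_version(header_text: str) -> Optional[str]:
    """Build 'MAJOR.MINOR.PATCH' from the #define lines in cudnn_version.h."""
    parts = []
    for name in ("CUDNN_MAJOR", "CUDNN_MINOR", "CUDNN_PATCHLEVEL"):
        match = re.search(rf"#define\s+{name}\s+(\d+)", header_text)
        if match is None:
            return None
        parts.append(match.group(1))
    return ".".join(parts)


def find_cudnn_version(paths: Iterable[str] = CANDIDATE_HEADERS) -> Optional[str]:
    """Return the version from the first readable candidate header, else None."""
    for path in paths:
        header = Path(path)
        if header.is_file():
            version = parse_cudnn_version(header.read_text(errors="ignore"))
            if version is not None:
                return version
    return None


if __name__ == "__main__":
    print(find_cudnn_version())
```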

Configuration complete

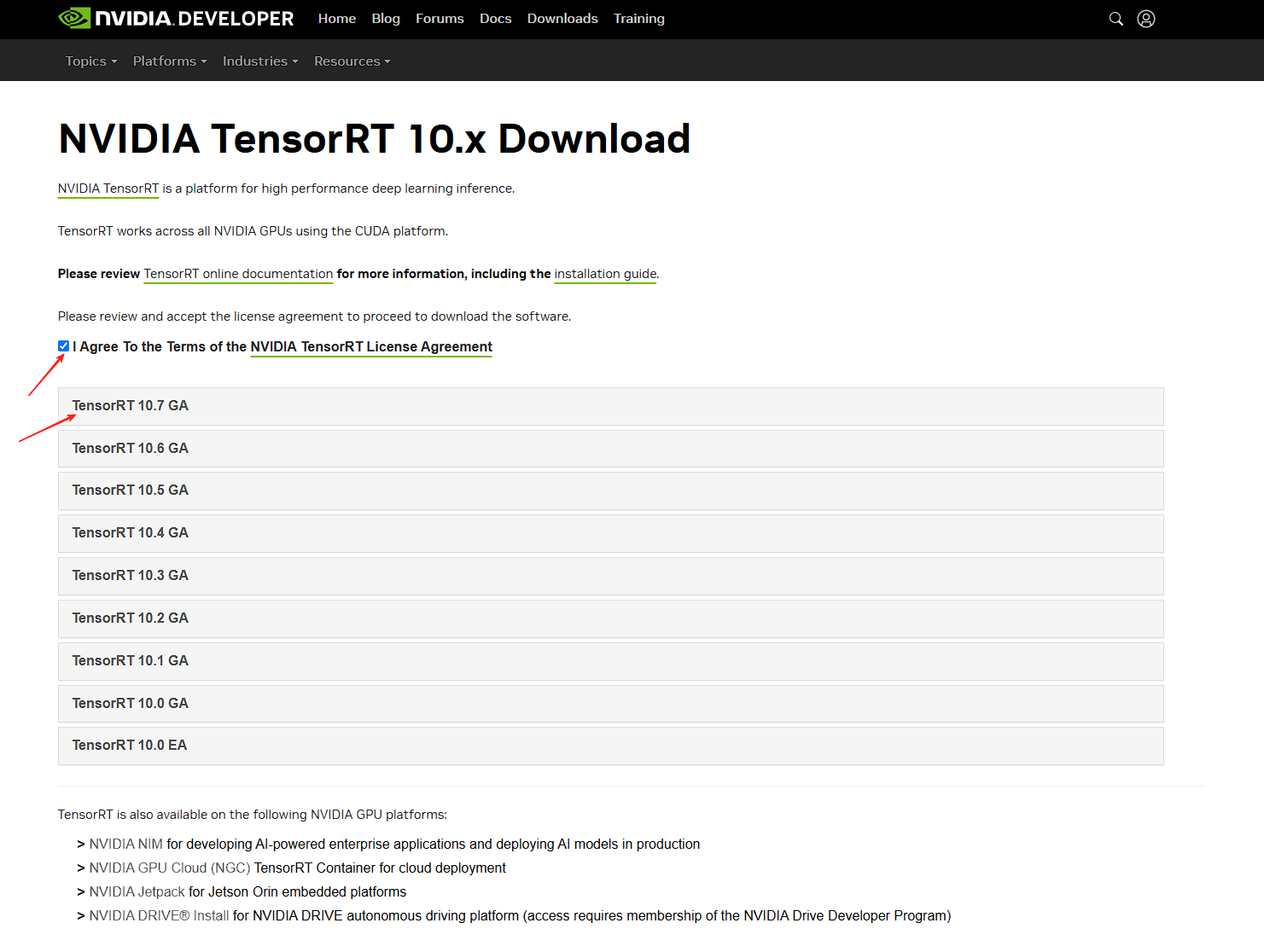

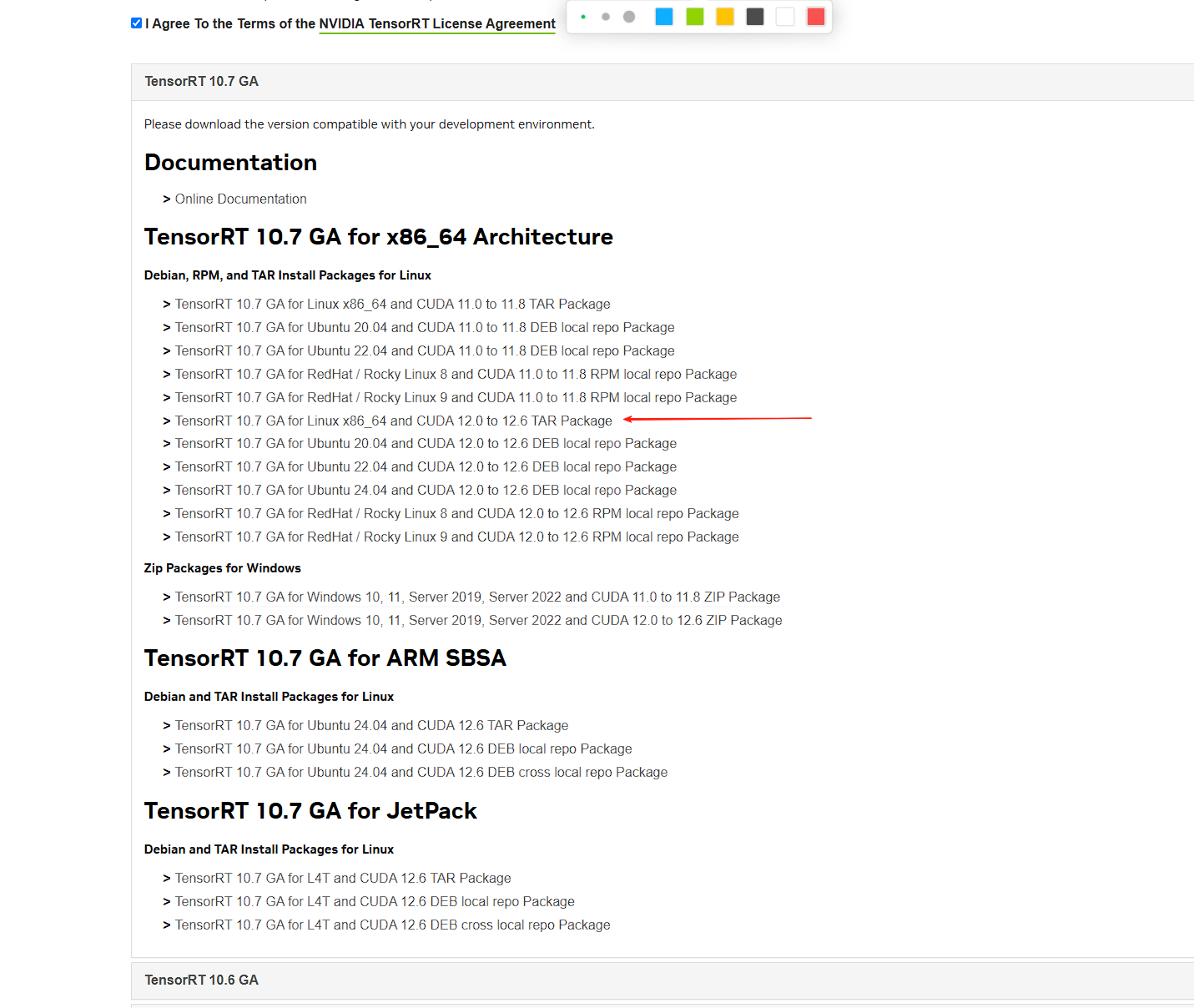

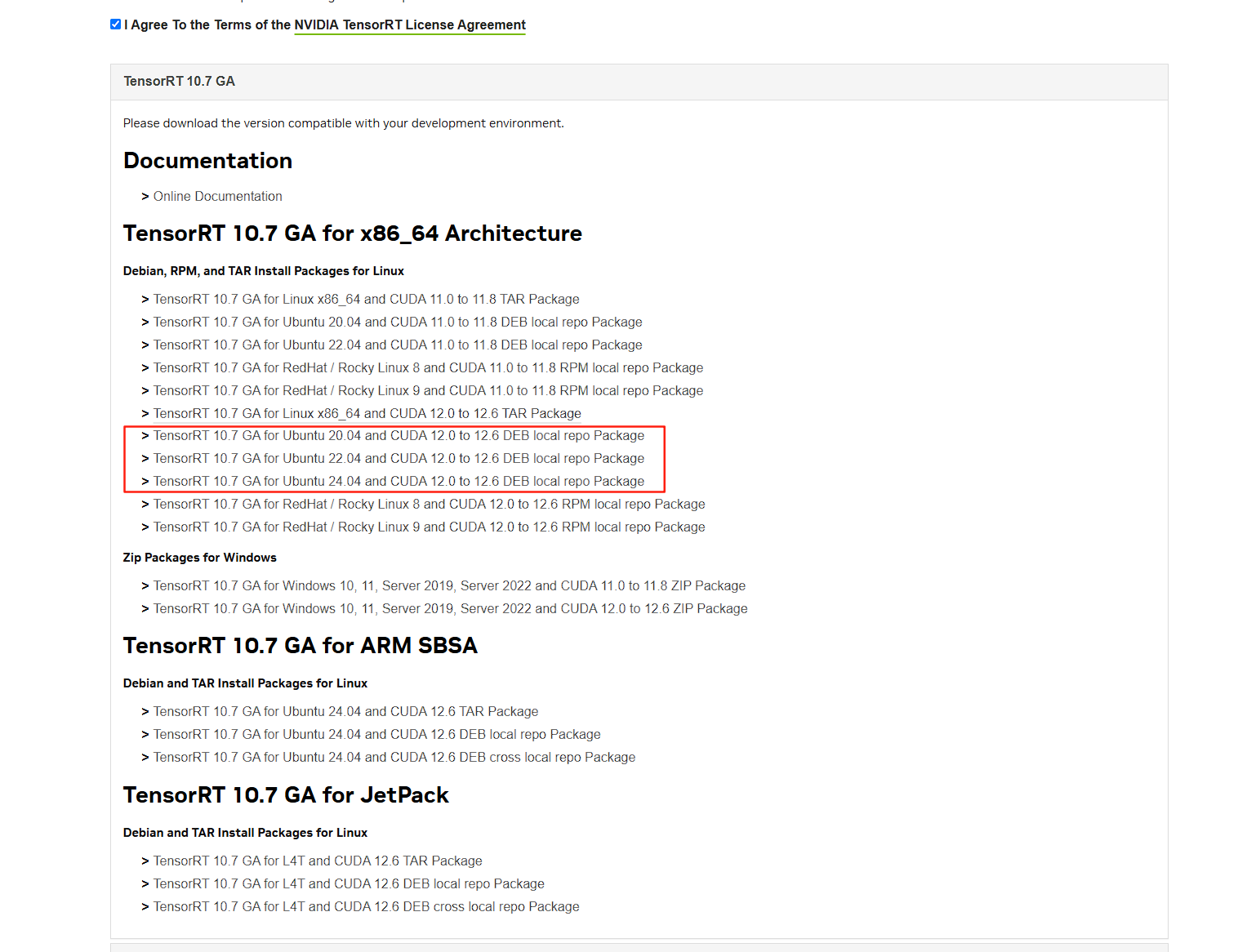

TensorRT

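Choose a suitable version; I use the latest one here.

Accept the license -> select the version -> confirm the CUDA version -> select your architecture (instruction set) and operating system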
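The "confirm the CUDA version" step matters because each TensorRT package is built for a range of CUDA versions. The sketch below checks whether the installed CUDA version falls inside such a range. The bounds are whatever the chosen package's label states; the values in the usage example are illustrative, not taken from the original. The helpers are hypothetical, and NVIDIA's download page remains the authoritative compatibility source.

```python
from typing import Tuple


def version_tuple(version: str) -> Tuple[int, ...]:
    """Turn '12.6' into (12, 6) so versions compare numerically, not as strings."""
    return tuple(int(part) for part in version.strip().split("."))


def cuda_supported(installed: str, lowest: str, highest: str) -> bool:
    """True when the installed CUDA version lies in the package's range (inclusive)."""
    # Compare only as many components as the bounds specify (e.g. major.minor),
    # padding a short installed version such as '12' with zeros.
    width = max(len(version_tuple(lowest)), len(version_tuple(highest)))
    current = (version_tuple(installed) + (0,) * width)[:width]
    return version_tuple(lowest) <= current <= version_tuple(highest)


if __name__ == "__main__":
    # Illustrative range only; read the real one from the package you select.
    print(cuda_supported("12.6", "12.0", "12.6"))
    print(cuda_supported("11.8", "12.0", "12.6"))
```

Comparing integer tuples avoids the string-comparison trap where `"12.10" < "12.6"`.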

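```bash
# Replace the URL after wget with the download link copied in the browser (right-click the selected package and copy the link)
```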
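The extraction and environment-variable steps are not preserved in the original. Assuming the TAR package was downloaded and extracted, its `lib/` directory usually needs to be on `LD_LIBRARY_PATH`, and its `bin/` directory (home of tools such as `trtexec`) can go on `PATH`. The sketch below generates those `export` lines and appends them to a shell startup file only once. The install directory, the `lib/` and `bin/` layout, and the helper names are all assumptions.

```python
from pathlib import Path
from typing import List


def tensorrt_env_lines(install_dir: str) -> List[str]:
    """Shell lines exposing an extracted TensorRT TAR package (assumed lib/ and bin/ layout)."""
    root = Path(install_dir).expanduser()
    return [
        f'export LD_LIBRARY_PATH="{root / "lib"}:$LD_LIBRARY_PATH"',
        f'export PATH="{root / "bin"}:$PATH"',
    ]


def append_once(rc_file: str, lines: List[str]) -> int:
    """Append lines not already present in rc_file; return how many were added."""
    rc = Path(rc_file).expanduser()
    text = rc.read_text() if rc.exists() else ""
    existing = text.splitlines()
    new_lines = [line for line in lines if line not in existing]
    if new_lines:
        # Start on a fresh line if the file does not end with a newline.
        prefix = "" if text == "" or text.endswith("\n") else "\n"
        with rc.open("a") as handle:
            handle.write(prefix + "\n".join(new_lines) + "\n")
    return len(new_lines)


if __name__ == "__main__":
    # Hypothetical install location; adjust to where the archive was extracted.
    for line in tensorrt_env_lines("~/TensorRT"):
        print(line)
```

A usage pattern would be `append_once("~/.bashrc", tensorrt_env_lines("~/TensorRT"))` followed by opening a new shell. Checking for existing lines keeps repeated runs from stacking duplicate exports.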

**Configure the project environment**

```bash
# Run the installation after activating the virtual environment
```
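Because the installation only lands in the project environment if that environment is active, a quick check before running `pip` can save a reinstall. The sketch below reports whether the current interpreter belongs to a `venv`, detected by `sys.prefix` differing from `sys.base_prefix`, or to an activated conda environment, detected through the `CONDA_PREFIX` variable. The function name is hypothetical.

```python
import os
import sys


def active_environment() -> str:
    """Describe which Python environment `python -m pip` would install into."""
    if sys.prefix != sys.base_prefix:
        # Inside a venv/virtualenv the prefix points at the environment directory.
        return f"venv: {sys.prefix}"
    conda_prefix = os.environ.get("CONDA_PREFIX")
    if conda_prefix:
        # Conda sets CONDA_PREFIX when an environment is activated.
        return f"conda: {conda_prefix}"
    return "system"


if __name__ == "__main__":
    print(active_environment())
```

Running installs as `python -m pip install ...` rather than plain `pip` also ties the install to the interpreter this check just inspected.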

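```bash
# Replace the URL after wget with the download link copied in the browser (right-click the selected package and copy the link)
```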
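If the file downloaded here is a Python wheel, which is an assumption since the original command is not preserved, its `cpXY` tag must match the environment's interpreter or `pip` will refuse it. The sketch below picks the matching wheel from a list of filenames. The filenames in the usage example are hypothetical, and `pick_wheel` is an illustrative helper.

```python
import sys
from typing import Iterable, Optional, Tuple


def pick_wheel(
    filenames: Iterable[str], version: Optional[Tuple[int, int]] = None
) -> Optional[str]:
    """Return the first .whl whose CPython tag matches the given (or current) Python."""
    major, minor = version or sys.version_info[:2]
    # The trailing dash keeps e.g. '-cp31-' from matching '-cp310-'.
    tag = f"-cp{major}{minor}-"
    for name in sorted(filenames):
        if name.endswith(".whl") and tag in name:
            return name
    return None


if __name__ == "__main__":
    # Hypothetical wheel names for illustration.
    names = [
        "tensorrt-10.0.0-cp39-none-linux_x86_64.whl",
        "tensorrt-10.0.0-cp310-none-linux_x86_64.whl",
    ]
    print(pick_wheel(names, (3, 10)))
```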

Configuration complete

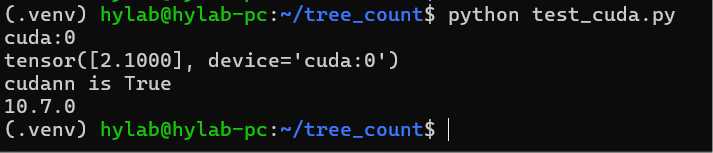

Environment test

```python
import torch
print(torch.cuda.is_available())  # True means PyTorch can see the GPU
```
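A slightly broader check can report the PyTorch, cuDNN and TensorRT versions in one pass and keep going when a package is missing. Keep in mind that PyTorch's pip wheels ship their own CUDA runtime, so `torch.version.cuda` reports the version PyTorch was built with, which may differ from the toolkit installed above. This is a sketch; the report keys and the function name are hypothetical.

```python
import importlib


def collect_env_report() -> dict:
    """Gather CUDA/cuDNN/TensorRT facts; missing packages are reported as None."""
    report = {"torch": None, "cuda_available": False, "torch_cuda": None,
              "cudnn": None, "gpu": None, "tensorrt": None}
    try:
        torch = importlib.import_module("torch")
    except (ImportError, OSError):  # OSError covers broken shared libraries
        torch = None
    if torch is not None:
        report["torch"] = torch.__version__
        report["torch_cuda"] = torch.version.cuda  # CUDA version PyTorch was built with
        report["cuda_available"] = bool(torch.cuda.is_available())
        if report["cuda_available"]:
            report["cudnn"] = torch.backends.cudnn.version()  # e.g. an int like 90100
            report["gpu"] = torch.cuda.get_device_name(0)
    try:
        tensorrt = importlib.import_module("tensorrt")
        report["tensorrt"] = tensorrt.__version__
    except (ImportError, OSError):
        pass
    return report


if __name__ == "__main__":
    for key, value in collect_env_report().items():
        print(f"{key}: {value}")
```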

- Title: Configuring CUDA and cuDNN and Deploying TensorRT on WSL2

- Author: 菜企鹅

- Created: 2024-12-22 12:00:00

- Updated: 2025-08-06 09:22:55

- Link: https://blog.cybersafezone.top/2024/12/22/WSL2安装CUDA_CUDNN_TensorRT/

- Copyright notice: This article is licensed under CC BY-NC-SA 4.0.